I spend a lot of time traveling for business. Like many people, I’ll glance into the cockpit as I board a plane. There’s something reassuring about seeing experienced pilots go through their pre-flight checks.

Now, imagine peeking inside and seeing no pilots because AI will be flying you to your destination. That will probably be a flight you won’t be taking. You likely would have the same hesitation about trusting AI with your healthcare or financial affairs.

We’re warming to the idea of letting AI agents, like a chatbot or intelligent assistant, handle simple tasks for us. But complicated ones where our welfare is at stake? We’re not there yet. That’s because the more complexity, the less trust.

What Is Agency?

This gap in comfort level is the core concept of agency. It’s about the different levels of autonomy we’re willing to grant to AI. The discussion isn’t really about technology at all. It’s about the innate human need to be in control.

So, what do we mean when we talk about agency? I describe it as the level of determinism associated with an AI agent’s operating rules. Or put another way, it’s how much independence we’re willing to grant agents to do our bidding and achieve our goals. I used a studio mixing board analogy in my first post of this series. We can modulate agent behavior the way engineers fine-tune music by amplifying or diminishing sound.

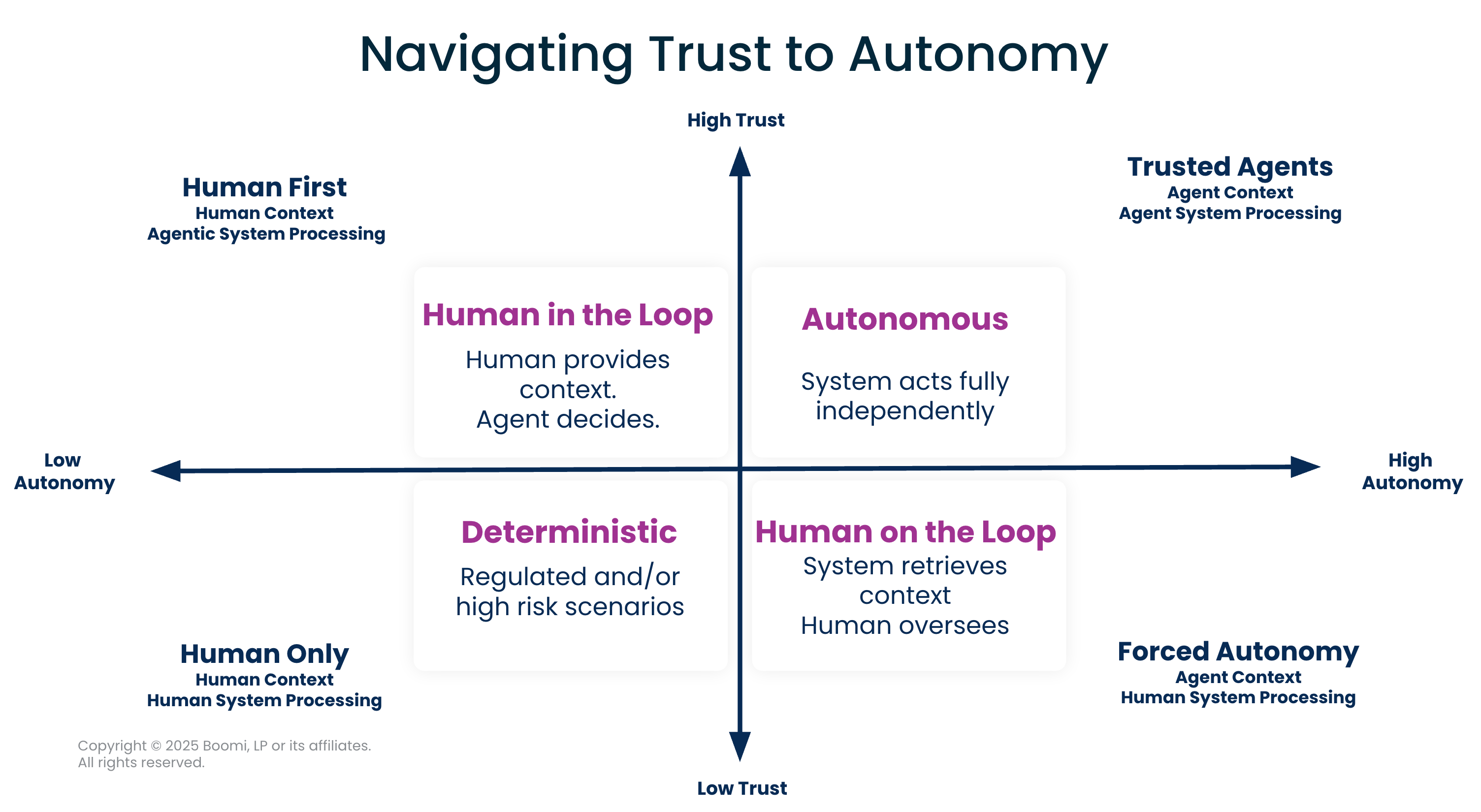

At Boomi, we consider the varying degrees of autonomy in four distinct modalities. The scale begins with systems that are mostly human-controlled and progresses to fully autonomous agents.

- AI Assist. Agents respond to human commands (prompts). This is a low autonomy level because people mostly run the show. This area limits AI use and relies upon human action.

- Humans in the Loop. This describes semi-autonomous processes in which agents actively perform tasks within the scope of their programming. But humans are the ultimate decision-makers. Humans are involved in the creative process via prompts, and they review what the AI or agentic system produces before it takes effect.

- Humans on the Loop. Here, agents are busy as they complete their assigned tasks. Humans are involved sporadically, as needed – almost like first responders – to solve problems and check work. The heavy lifting is done by the agentic system, with humans having the “sign off.”

- Autonomous. Welcome to your agent assistant! At this level, trust is high, and agents demonstrate their independence with little or no human involvement.

We’ve had discussions with technology leaders, where we situated these categories into a traditional quadrant format that looks like this:

(H – Human, A – Agent, I – Input, V – Validation)

How It Works

Here’s an example of how that matrix might look with a common challenge: email overload. Let’s say you want to assign an agent to manage your inbox and outbox. The differences might look like this:

- With AI Assist, you write responses to all your emails, and AI offers suggestions for improvement. Humans determine how much assistance they want from AI while they are working.

- With Humans in the Loop, the agent crafts the first draft of emails, which you edit and tweak before sending.

- With Humans on the Loop, the agent writes and sends emails automatically, only flagging ones for review that you specifically instructed not to send without your approval.

- With Autonomous, you entrust the agent to act as your personal assistant, responding to all emails on your behalf without your involvement.

I know the last one is a step too far. In fact, probably the bottom two. It’s why, on that scale of four, most agents in active use are at the first and second levels – especially in business.
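To make the four email behaviors concrete, here is a minimal Python sketch. It is only an illustration of the levels described above, not how Boomi or any product implements them. The names `Autonomy`, `Plan`, `plan_reply`, and the `flagged` parameter are hypothetical and introduced just for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Autonomy(Enum):
    """The four levels from the article (names are illustrative)."""
    AI_ASSIST = 1
    HUMAN_IN_THE_LOOP = 2
    HUMAN_ON_THE_LOOP = 3
    AUTONOMOUS = 4


@dataclass
class Plan:
    drafted_by: str       # "human" or "agent"
    needs_approval: bool  # True if a person must approve before sending


def plan_reply(level: Autonomy, flagged: bool = False) -> Plan:
    """Decide who drafts a reply and whether a human must approve it.

    `flagged` marks mail the user said must never go out unreviewed.
    It only changes the outcome at the HUMAN_ON_THE_LOOP level.
    """
    if level is Autonomy.AI_ASSIST:
        # The human writes and sends; the agent only suggests improvements.
        return Plan(drafted_by="human", needs_approval=True)
    if level is Autonomy.HUMAN_IN_THE_LOOP:
        # The agent drafts; the human edits every email before it goes out.
        return Plan(drafted_by="agent", needs_approval=True)
    if level is Autonomy.HUMAN_ON_THE_LOOP:
        # The agent drafts and sends, pausing only on flagged mail.
        return Plan(drafted_by="agent", needs_approval=flagged)
    # AUTONOMOUS: the agent drafts and sends everything on its own.
    return Plan(drafted_by="agent", needs_approval=False)


if __name__ == "__main__":
    for level in Autonomy:
        print(level.name, plan_reply(level, flagged=True))
```

The point of the sketch is that the level is a single setting, much like a fader on the mixing board: moving it changes who drafts and who approves without changing the agent itself.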

The Importance of Trust

After considering our willingness to cede control to systems, we must trust the systems we create. More trust, more autonomy allowed. No trust, no autonomy. If generative AI enables us to make sense of unstructured data, there’s an enormous opportunity for enterprises and, indeed, society to transform at a scale we have never seen before. After all, 80% to 90% of the data we create is unstructured (emails, videos, audio, spreadsheets, etc.). After decades of trying to find ways to do this, we now have the computational ability to identify simple patterns in a complex world.

Obviously, as autonomy increases, so does the amount of faith we place in the agent. The stakes rise, too. The risks and benefits? Move fast and break things. More agent independence means moving quickly and freeing up time for more impactful work. But with that comes the risk of breaking things, and sometimes not knowing what’s breaking. The sweet spot is when you can move quickly, remediate issues immediately, and achieve efficiency gains with provable ROI. Agent autonomy improves productivity, but only if it’s done correctly.

But it ultimately comes down to basic human perception about yielding control to the machines. That’s a complicated question that involves our inherent belief in these systems. AI large language models (LLMs) are often referred to as “black boxes.” Their scale and probabilistic nature make it very difficult for us to understand how they come to specific decisions. And we’re not willing to fully trust what we don’t understand.

Beyond the lack of transparency, there’s the issue of AI models and agents making mistakes, including the confident fabrications known as hallucinations. Although that makes them like fallible humans, we’re less inclined to cut digital entities any slack for errors.

When thinking about how much independence an agent should possess, ask yourself: When I give it the power to do this thing, is that what I really want? And if it is, what questions should I ask myself before I build that agent? What are the trade-offs if I create an agent whose work I don’t check?

Perhaps most importantly, you want to know if this agent will be beneficial without compromising the company, customers, or employees.

Weighing the Risks

My takeaway message here is that people need to think about “what if” in a positive way, but they also need to consider the risks. There’s a balance. Agents are a dynamic system of orchestration. Creating them is not hard. The challenge is managing, governing, and securing them. Part of that is deciding how much agency you will allow them.

Agents are already becoming a part of our everyday and work lives. The time may not be far off when we do trust them with our health and finances. But as for flying my plane?

That will take more time.

Learn how Boomi Agentstudio can help your organization with AI agents.
